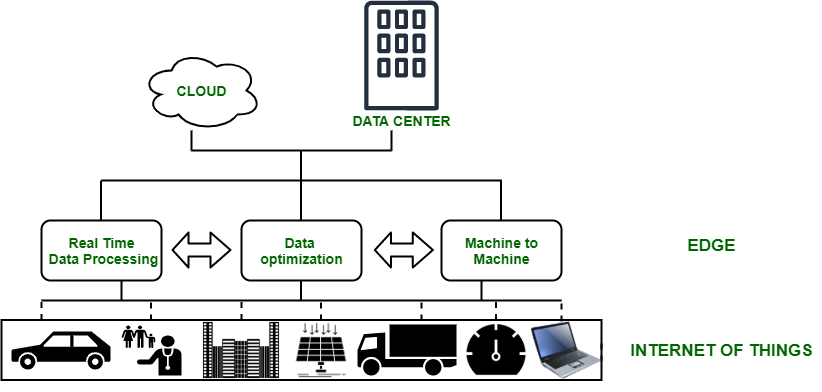

Because the Internet isn't built like the old telephone network, "closer" in terms of routing expediency is not necessarily closer in geographical distance. And depending upon how many different types of service providers your organization has contracted with - public cloud applications providers (SaaS), apps platform providers (PaaS), leased infrastructure providers (IaaS), content delivery networks - there may be multiple tracts of IT real estate vying to be "the edge" at any one time.

Inside a Schneider Electric micro data center cabinet. Credit: Scott Fulton

**The current topology of enterprise networks**

There are three places most enterprises tend to deploy and manage their own applications and services:

- On-premises, where data centers house multiple racks of servers, where they're equipped with the resources needed to power and cool them, and where there's dedicated connectivity to outside resources.
- Colocation facilities, where customer equipment is hosted in a fully managed building where power, cooling, and connectivity are provided as services.
- Cloud service providers, where customer infrastructure may be virtualized to some extent, and services and applications are provided on a per-use basis, enabling operations to be accounted for as operational expenses rather than capital expenditures.

The architects of edge computing would seek to add their design as a fourth category to this list: one that leverages the portability of smaller, containerized facilities with smaller, more modular servers, to reduce the distances between the processing point and the consumption point of functionality in the network.

**Potential benefits**

If their plans pan out, they seek to accomplish the following:
Rugged edge computing matters because it brings real-time, low-latency compute and storage power closer to the source of data generation, in harsh environments that regular, consumer-grade desktop PCs cannot survive. Edge computing also matters because it enables applications that would not otherwise be possible, such as autonomous vehicles, remote monitoring and control of machinery and equipment, surveillance and security, and factory automation. These applications often require real-time data processing and analysis. Relying on the cloud is not an option for them, because the round trip from the origin device to a cloud data center thousands of miles away and back can take up to a few seconds, which is simply too long for applications that require real-time decision making. Furthermore, edge computing matters because it alleviates the burden placed on the cloud as the number of data-generating IoT devices continues to increase.

In a modern communications network designed for use at the edge - for example, a 5G wireless network - there are two possible strategies at work:

- Data streams, audio, and video may be received faster and with fewer pauses (preferably none at all) when servers are separated from their users by a minimum of intermediate routing points, or "hops." Content delivery networks (CDNs) from providers such as Akamai, Cloudflare, and NTT Communications are built around this strategy.
- Applications may be expedited when their processors are stationed closer to where the data is collected. This is especially true for applications for logistics and large-scale manufacturing, as well as for the Internet of Things (IoT), where sensors or data-collecting devices are numerous and highly distributed.

Depending on the application, when either or both edge strategies are employed, these servers may actually end up on one end of the network or the other.
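To make the latency argument a little more concrete, here is a minimal Python sketch of how path length and hop count might combine into a round-trip estimate. It is a back-of-the-envelope model, not a measurement tool: the fiber speed is the commonly cited figure of roughly two-thirds the speed of light, while the per-hop delay, the function names, and the three example sites are all hypothetical values chosen for illustration.

```python
# Assumption: signals in optical fiber travel at roughly two-thirds of c.
FIBER_SPEED_KM_PER_S = 200_000.0
# Assumption: illustrative per-hop processing/queuing delay; real values vary widely.
PER_HOP_DELAY_MS = 0.5


def estimate_rtt_ms(distance_km: float, hops: int) -> float:
    """Rough round-trip time: propagation plus per-hop delay, in both directions."""
    one_way_propagation_ms = distance_km / FIBER_SPEED_KM_PER_S * 1000.0
    one_way_ms = one_way_propagation_ms + hops * PER_HOP_DELAY_MS
    return 2.0 * one_way_ms


def pick_lowest_latency(sites: dict[str, tuple[float, int]]) -> str:
    """Return the name of the site with the smallest estimated round-trip time."""
    return min(sites, key=lambda name: estimate_rtt_ms(*sites[name]))


# Hypothetical sites: (path length in km, number of intermediate hops).
SITES = {
    "distant-cloud-region": (3000.0, 14),
    "nearby-but-indirect": (100.0, 20),
    "edge-micro-data-center": (150.0, 3),
}

if __name__ == "__main__":
    for name, (km, hops) in SITES.items():
        print(f"{name}: {estimate_rtt_ms(km, hops):.1f} ms")
    print("lowest estimated latency:", pick_lowest_latency(SITES))
```

Under these assumed numbers, the geographically nearest site is not the fastest one, because its path crosses many more hops. Real deployments would measure latency directly rather than estimate it, since congestion and processing time at each end can easily dominate the propagation delay modeled here.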
